Deepfakes and Synthetic Media: Summary and Highlights

(Tuesday, April 2, 2024) All Tech Is Human was privileged to produce Deepfakes and Synthetic Media, a livestream featuring a world-class panel of experts discussing the threats deepfakes and synthetic media pose to our world.

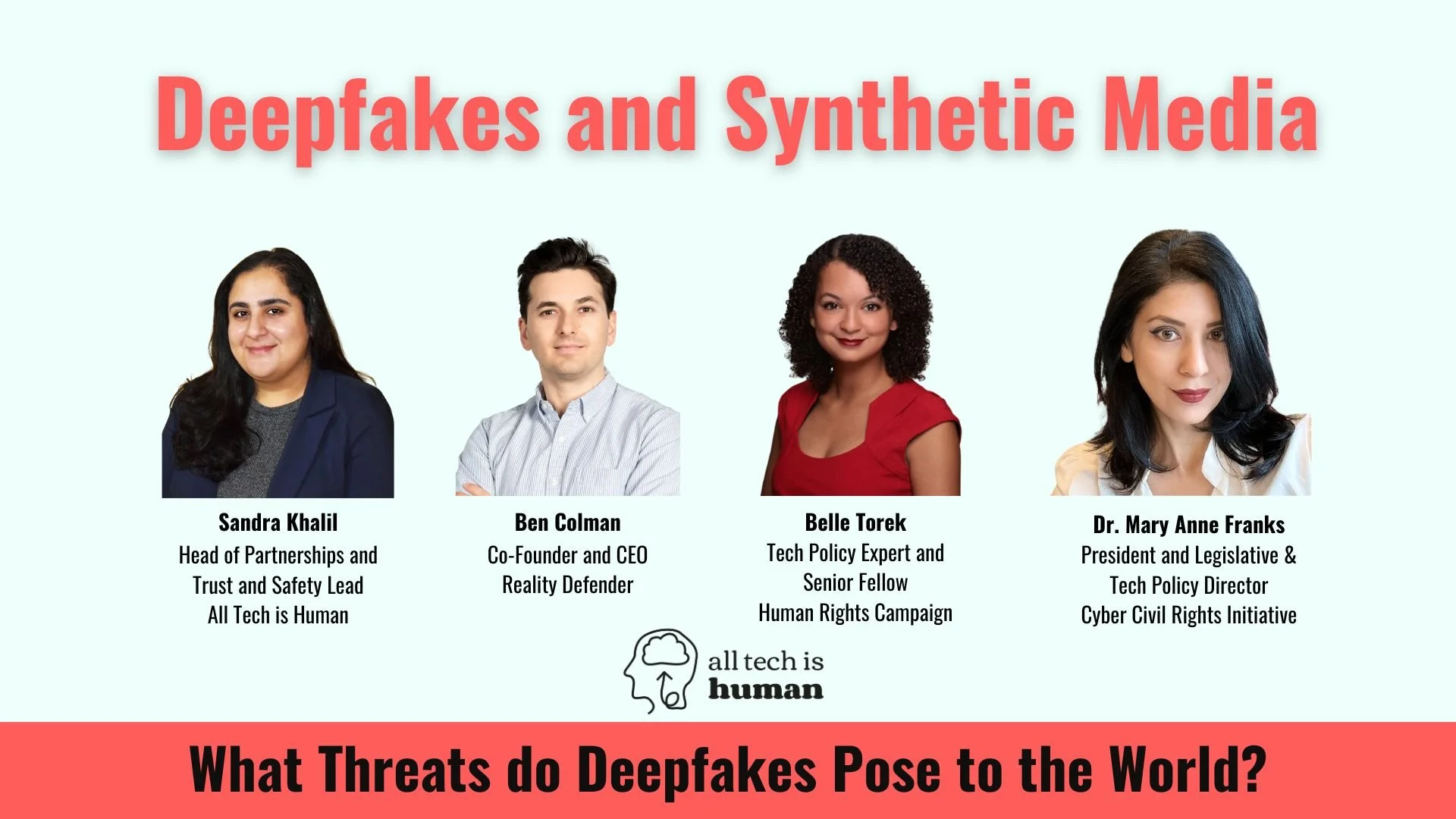

Deepfakes and Synthetic Media was hosted by All Tech Is Human Head of Partnerships and Trust & Safety Vertical Sandra Khalil, and featured Dr. Mary Anne Franks (President and Legislative & Tech Policy Director, Cyber Civil Rights Initiative), Belle Torek (Tech Policy Expert & Senior Fellow, Human Rights Campaign), and Ben Colman (Co-Founder and CEO, Reality Defender).

The panel discussed…

The differences between deepfakes, cheap fakes, and shallow fakes

Motivation to address the ongoing harms of deepfakes and synthetic media

The ways deepfakes and synthetic media harm marginalized communities

How deepfakes and synthetic media inform the larger information ecosystem

The detrimental impacts of deepfakes and synthetic media on women and girls

The legal bottlenecks associated with tackling these issues.

Below, you will find a selection of lightly-edited excerpts from the panel. To view the full livestream, click here.

What is the difference between a deepfake, cheap fake, and shallow fake?

Dr. Mary Anne Franks:

The term deepfake was coined by a Reddit user back in 2017, and it's kind of a portmanteau between deep learning — machine learning and some kind of artificial intelligence dimension — combined with the word fake. To produce something that looks real, but isn't real.

The distinction between a deepfake and a so-called cheap fake or a shallow fake is there are lots of ways of manipulating photos to make them seem real even though they're fake that don't involve that kind of machine learning. That kind of sophisticated or formally sophisticated technology involves things like Photoshopping or retouching, or even not really technology such as false captioning or slowing down a video so that it makes somebody look as though they might be slurring their speech.

The basic distinction is that these are presentations. They can be either visual or audio. And they are good enough fakes to fool a reasonable person into thinking what is being depicted is accurate when, in fact, it isn't. The distinction is between the sophisticated machine learning type, a deepfake or digital forgery, and a shallow fake or a cheap fake, which covers other forms of manipulation.

What motivated you to undertake the work of identifying deepfakes?

Ben Colman:

I lived my life at the intersection of cybersecurity and data science, building tools and technology that could protect companies and consumers against digital threats.

As a company, we have the privilege of being the category leader in the deepfake and generative AI fraud space, but we only support companies and governments right now. That's for two main reasons, which we hope to change. One is that computational power is very expensive and we need to cloud compute. We can't run these locally on a laptop or an iPhone. Also, we have no clear regulations requiring new platforms to do anything yet.

We see a future where the platforms themselves are responsible for detecting this type of AI fraud and not forcing the consumers, average people, our friends, our parents, to try and become experts, something which is fundamentally impossible. I don't expect people to be able to check code for ransomware or an APT or a computer virus. Similarly within general media, a human cannot tell the difference. You need AI to detect AI.

What advocacy work is being done in this space?

Belle Torek:

We've spent years studying the nonconsensual distribution of intimate imagery, also termed nonconsensual pornography or revenge porn, which is the act of sharing sexually explicit imagery, typically photos and videos, without a subject's consent.

We have come to realize that the vast majority of deepfake videos, between 96 and 98 percent of them, are sexual in nature and are nonconsensual pornography. When we saw Taylor Swift, one of the most powerful, renowned, respected women in the world, being a target of this kind of pervasive harm, we realized immediately, “Well, if Taylor Swift can't protect herself against these kinds of harms and abuses, who among us can?”

We're really thankful to have seen Swifties and Swift's legal team and all of the people who kind of coalesce around Taylor side with her to take down as much abusive content as possible. But for most people, the threat of legal recourse doesn't loom as heavy as Taylor Swift's legal team. We found this alarming and we wanted to draw attention to the fact that right now legal recourse for these harms is inadequate.

How does synthetic media harm marginalized communities?

Nonconsensual deepfakes and harmful synthetic media on the whole are such an intersectional issue. On the one hand, we see nonconsensual pornography and its disparate, overwhelming impact on women. We think about it within the context of gender-based violence. I think about it through a slightly more intersectional lens at the Human Rights Campaign, where I also think about not just women, but folks who are gender expansive, nonbinary, and the ways in which we think about women and maybe exclude members of the transgender community.

We focus a lot on thinking about the ways in which AI and technology harm the LGBTQ+ community, especially amid surging anti-LGBTQ+ legislation, and I'm based in Florida experiencing it firsthand, especially in an election year. We're really concerned about the ways in which these harms not only impact members of the LGBTQ+ community, but also democracy at large and lead to bad outcomes for everyone.

One of the things that Ariana would have been able to speak about is the impact of these harms, particularly on intersectional and multiply marginalized communities. All of this to say that maybe a Black trans woman who is the victim of a harmful deepfake could experience more significant harms with less access to recourse than maybe a white male counterpart experiencing a comparable harm. When you think about low-income or disabled communities, these harms become even worse and more pervasive.

How do deepfakes intersect with the larger information ecosystem and the spread of disinformation?

Dr. Mary Anne Franks:

What we generally see with image-based sexual abuse is women and girls tend to be disproportionately targeted. Sexual minorities tend to be disproportionately targeted, and the resources available to those groups tend to be fewer and far between. And one of the lessons that we keep trying to emphasize at CCRI is that we've had iterations of technology being used against women and girls initially that are then used in much more sophisticated ways, not just against women and girls, but of course, against everyone in society.

Ben Colman:

Part of the challenge here is that a lot of folks put their hands up and say, “We don't have anything to solve for this.”

Our short answer is we do have something to solve for this. I'm not trying to pitch my own company. There's a few companies in the space and we can do it programmatically in real time. It's nothing new. Platforms, for example, social media or streaming platforms, already follow regulations to scan for CSAM (imagery of underage people) and to scan for violence or nudity. When you try and watch a clip on YouTube of a football game or the Super Bowl, a lot of times it says, please confirm you're over 18. Please confirm you want to see this because, while it's not nudity or violence, it's an injury. We look to other emergent regulations around scanning for the latest Drake song.

It's not a new form or an application. It's just another extension of different types of content moderation, which, unfortunately right now, no consumer platforms are actually focusing on to the extent they're actually reducing their impact. They're cutting their teams focused on trust and safety, cutting their teams focused on content moderation. And we see big companies, big banks, big media, and governments [doing this]. I don't understand the problem because it actually affects dollars and cents. It affects lives. But the platforms that average people are using on the computer or on their phones aren't providing the minimum protection that they should.

What strategies have been most effective in mitigating the detrimental impacts of deepfakes that specifically target women and girls?

We need to have technology reform because a lot of times it's going to be the actors themselves within the tech industry that can do more and should do more regardless of what the law says. And then we also need to provide support to existing victims and try to educate the public to not become perpetrators.

We're trying all of those things here as well, because nonconsensual pornography includes things like digitally manipulated images. We are once again promoting model legislation in terms of what should be prohibited. Our research has shown that if we're going to have effective legal prohibitions, they are going to have to include criminal penalties because [what exists now] may be useful for those victims who, maybe like Taylor Swift, have a legal team who have time, or have a team who can work on these issues, but litigation puts all of the burden on the victim. It requires money, it requires time, it requires access to a lawyer, and so by itself can never really serve as either a path for full justice for a victim, or really to deter someone from engaging in this abuse.

Our research has shown that when we ask perpetrators if there's anything that would have stopped them from engaging in this behavior, the answer overwhelmingly is, “If I thought I could go to jail, I wouldn't have done it.”

Not if I understood the harm, not if I could get sued, just if I would go to jail, I wouldn't do it.

Panelist Bios

Dr. Mary Anne Franks, Professor in Intellectual Property, Technology, & Civil Rights Law at George Washington Law School

Dr. Mary Anne Franks is the Eugene L. and Barbara A. Bernard Professor in Intellectual Property, Technology, and Civil Rights Law at George Washington Law School, where her areas of expertise include First Amendment law, cyberlaw, criminal law, and family law. Dr. Franks also serves as the President and Legislative & Tech Policy Director of the Cyber Civil Rights Initiative, the leading U.S.-based nonprofit organization focused on image-based sexual abuse. Her model legislation on the non-consensual distribution of intimate images (NDII, sometimes referred to as “revenge porn”) has served as the template for multiple state and federal laws, and she is a frequent advisor to the federal government, state and federal lawmakers, and tech companies on privacy, free expression, and safety issues. Dr. Franks is the author of the award-winning book, The Cult of the Constitution (Stanford Press, 2019); her second book, Fearless Speech (Bold Type Books) will be published in October 2024. She holds a J.D. from Harvard Law School as well as a doctorate and a master’s degree from Oxford University, where she studied as a Rhodes Scholar. She is an Affiliate Fellow of the Yale Law School Information Society Project and a member of the District of Columbia bar.

Ben Colman, Co-Founder and CEO, Reality Defender

Ben Colman is the Co-Founder and CEO of Reality Defender (www.realitydefender.com), the leading deepfake detection platform helping enterprises flag fraudulent users and content. Over the past 15 years, Ben has scaled multiple companies at the intersection of cybersecurity and data science. Prior to this, Ben led cybersecurity commercialization at Goldman Sachs, and worked at Google. He holds an MBA from NYU Stern and a bachelor's degree from Claremont McKenna College.

Belle Torek, Senior Fellow, Tech Advocacy at the Human Rights Campaign

Belle Torek is Senior Fellow, Tech Advocacy at the Human Rights Campaign, where her subject matter expertise informs HRC’s strategy to advance LGBTQ+ safety, inclusion, and equality across social media, artificial intelligence, and emerging technologies. She also holds affiliate roles with All Tech Is Human and UNC Chapel Hill’s Center for Information, Technology, and Public Life. Prior to HRC, Torek was Associate Director, Technology Policy to the Anti-Defamation League, where she served as an internal expert on issues including platform accountability, AI regulation, and First Amendment applications to online speech. Torek is a proud alum of the Cyber Civil Rights Initiative, where she supported legislative efforts to combat several forms of online abuse and collaborated with social media platforms on their hate and harassment policies. She is elated to participate on this panel with Dr. Mary Anne Franks, her cherished former professor and longtime mentor, who inspired Torek’s own tech policy journey.

About All Tech Is Human

All Tech Is Human is a non-profit committed to building the world’s largest multistakeholder, multidisciplinary network in Responsible Tech. This allows us to tackle wicked tech & society issues while moving at the speed of tech, leverage the collective intelligence of the community, and diversify the traditional tech pipeline. Together, we work to solve tech & society’s thorniest issues.

Join our working groups on Cyber & Democracy and Tech Policy

Read our reports, such as our Responsible Tech Guide and our recent Responsible Tech Org List 2024

Join our Slack community of over 8k members across 89 countries, with numerous individuals focused on creating healthy digital spaces

Read our Responsible Tech Job Board and join our talent pool